[Russ Maschmeyer] and Spatial Commerce Projects developed WonkaVision to demonstrate how 3D eye tracking from a single webcam can support rendering a graphical virtual reality (VR) display with realistic depth and space. Spatial Commerce Projects is a Shopify lab working to provide concepts, prototypes, and tools to explore the crossroads of spatial computing and commerce.

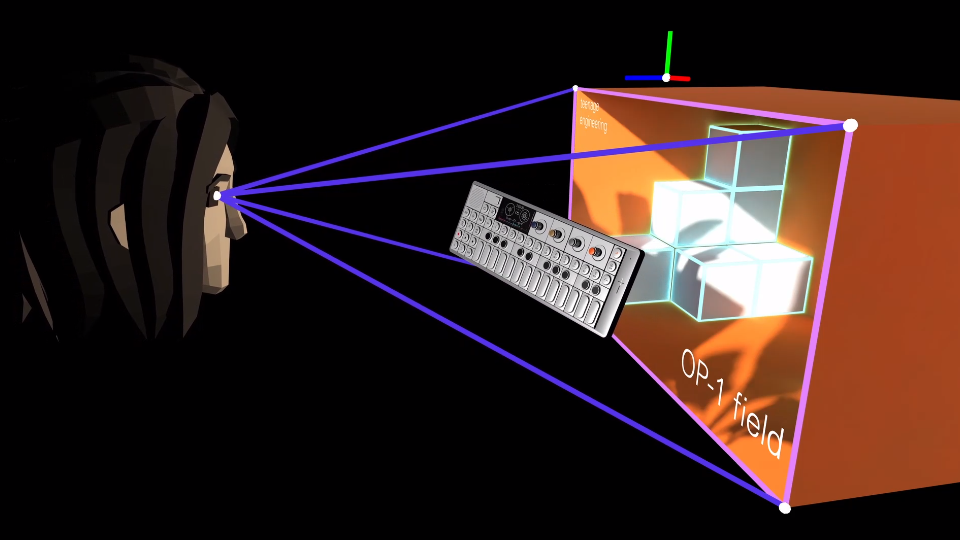

The graphical output conveys a real sense of depth and three-dimensional space through an optical illusion that reacts to the viewer’s eye position: the tracked eye position is used to render view-dependent images. The computer screen is made to feel like a window into a realistic 3D virtual space, where objects beyond the window appear to have depth and objects in front of the window appear to project out into the space in front of the screen. The downside is that the experience only works for one viewer at a time, since each frame is rendered for a single eye position.
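One common way to get this "screen as a window" effect is an off-axis (asymmetric) perspective frustum whose edges pass through the corners of the physical screen as seen from the eye. The sketch below is only an illustration of that general technique, not WonkaVision's actual code. It assumes the screen is centered at the origin in the z = 0 plane, the eye sits at a positive z in front of it, and all lengths share one unit; the names `Frustum` and `off_axis_frustum` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Frustum:
    """Frustum extents measured on the near plane (glFrustum-style)."""
    left: float
    right: float
    bottom: float
    top: float
    near: float
    far: float


def off_axis_frustum(eye, screen_w, screen_h, near=0.01, far=10.0):
    """Return an asymmetric frustum for an eye at (ex, ey, ez).

    Assumption: the screen is a screen_w x screen_h rectangle centered
    at the origin in the z = 0 plane, and ez > 0 is the eye's distance
    in front of it.
    """
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("eye must be in front of the screen (ez > 0)")

    # Similar triangles: project each screen edge, as seen from the eye,
    # onto the near plane.
    scale = near / ez
    return Frustum(
        left=(-screen_w / 2 - ex) * scale,
        right=(screen_w / 2 - ex) * scale,
        bottom=(-screen_h / 2 - ey) * scale,
        top=(screen_h / 2 - ey) * scale,
        near=near,
        far=far,
    )


if __name__ == "__main__":
    # Eye centered, then shifted 10 cm to the right of a 60 cm x 34 cm screen.
    print(off_axis_frustum((0.0, 0.0, 0.5), 0.6, 0.34))
    print(off_axis_frustum((0.1, 0.0, 0.5), 0.6, 0.34))
```

In a renderer, these extents would typically feed a glFrustum-style projection matrix, with the virtual camera translated to the eye position every frame. When the eye is centered the frustum is symmetric; as the eye moves sideways it skews, which is what produces the motion parallax.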

Eye tracking is performed using Google’s MediaPipe Iris library, which relies on the fact that the horizontal iris diameter of the human eye is close to 11.7 mm for most people. Once the library’s computer vision models have located the irises in the webcam image, this near-constant physical size lets it estimate how far each eye is from the camera without a dedicated depth sensor, which is what makes 3D eye tracking from a single webcam possible.
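To see why a known iris size is useful, consider a simple pinhole camera model: an object of known physical width that appears a given number of pixels wide must sit at a distance proportional to the camera's focal length. The sketch below is a hedged illustration of that geometry and does not call MediaPipe itself. It assumes the iris center and diameter in pixels have already been measured and that the focal length in pixels and principal point are known from calibration; all function and parameter names are hypothetical.

```python
IRIS_DIAMETER_MM = 11.7  # approximate horizontal iris diameter cited above


def eye_distance_mm(iris_diameter_px, focal_length_px):
    """Estimate camera-to-eye distance with the pinhole model.

    distance = focal_length_px * real_size_mm / apparent_size_px
    """
    if iris_diameter_px <= 0:
        raise ValueError("iris_diameter_px must be positive")
    return focal_length_px * IRIS_DIAMETER_MM / iris_diameter_px


def eye_position_mm(iris_center_px, iris_diameter_px,
                    focal_length_px, principal_point_px):
    """Back-project the iris center to a 3D point in camera coordinates.

    Assumption: x grows to the right and y grows downward, following the
    usual image convention; z is the distance along the optical axis.
    """
    z = eye_distance_mm(iris_diameter_px, focal_length_px)
    u, v = iris_center_px
    cx, cy = principal_point_px
    x = (u - cx) * z / focal_length_px
    y = (v - cy) * z / focal_length_px
    return (x, y, z)


if __name__ == "__main__":
    # Hypothetical measurement: iris 23.4 px wide, 100 px right of center,
    # camera focal length 1000 px, principal point at (640, 360).
    print(eye_position_mm((740.0, 360.0), 23.4, 1000.0, (640.0, 360.0)))
```

The resulting 3D position, converted into the screen's coordinate frame, is the kind of input a view-dependent renderer needs. Because the estimate scales inversely with the measured pixel diameter, small errors in locating the iris edge translate directly into distance error, so some temporal smoothing is usually worthwhile.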

Generating view-dependent images from a viewer’s tracked eye position was inspired by a classic hack from Johnny Lee, who created a VR display using a Wiimote. Hopefully, these eye-tracking approaches will continue to evolve and provide improved motion-responsive views into immersive virtual spaces.

Source: https://hackaday.com/2023/03/12/immersive-virtual-reality-from-the-humble-webcam/