There are over 2 billion websites and over 50 billion web pages on the internet. All of them contain information in different formats: text, video, images, or tables.

If you ever want to get data from a webpage into Excel, the easiest option is to copy and paste the webpage content. But it’s not the best way to do it, because the data will not be formatted properly, and making it usable takes considerable time.

That’s where web scraping comes in. Web scraping converts unstructured website data into a structured Excel format in seconds, saving you time and effort.

In this blog, we’ll explore three ways to scrape data from websites and download it to Excel. Whether you’re a business owner, analyst, or data enthusiast, this blog will provide the tools to effectively scrape data from websites and turn it into valuable insights.

3 ways to scrape data from a website to Excel

We will take a closer look at these three ways to scrape data from a website to Excel.

- Using an automated web scraping tool

- Using Excel VBA

- Using Excel Web Queries

Using an automated web scraping tool

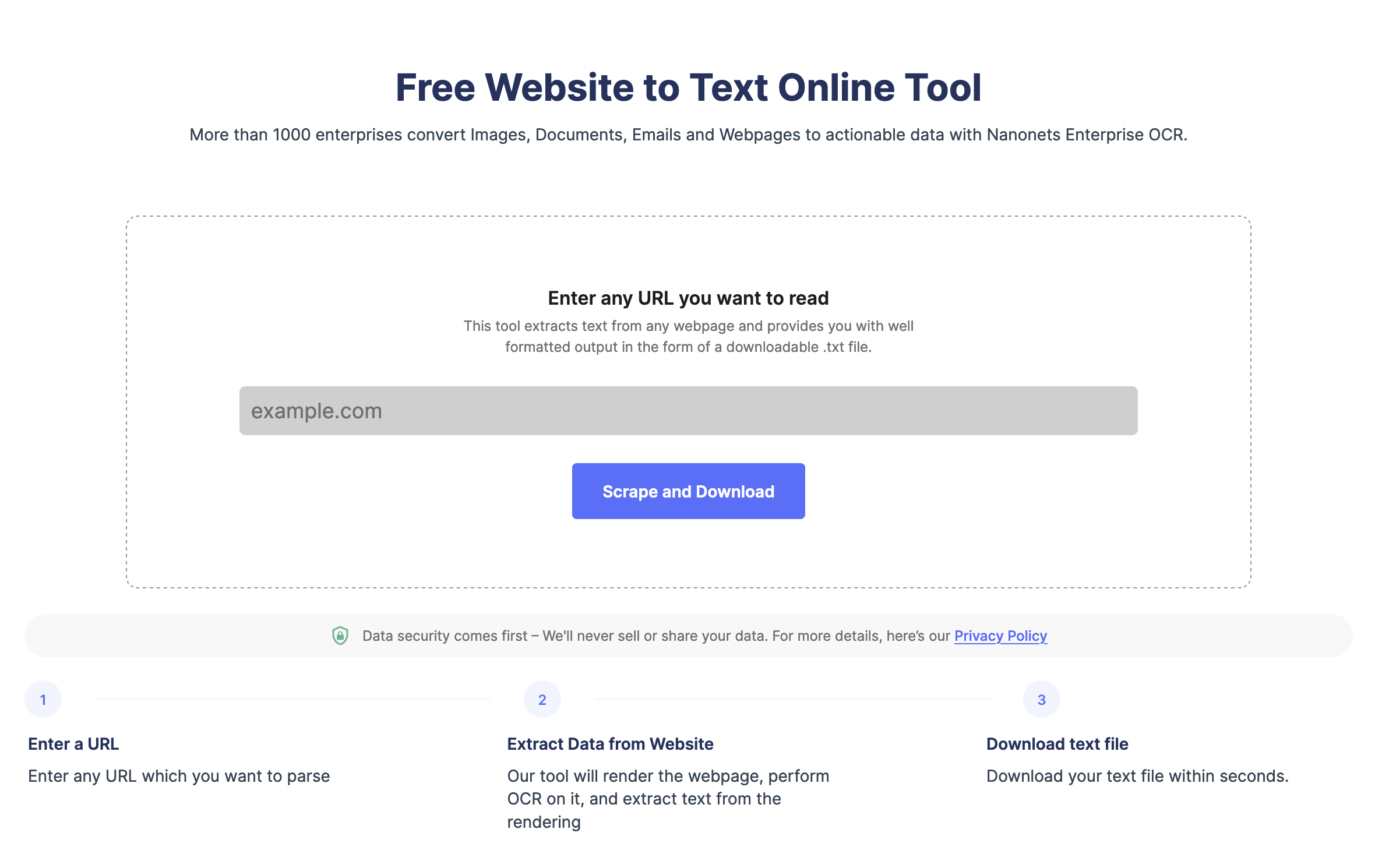

If you want to instantly scrape webpage information to Excel, you can try a no-code tool like the Nanonets website scraper. This free web scraping tool can instantly scrape website data and convert it into an Excel format.

Here are three steps to scrape website data to Excel automatically using Nanonets:

Step 1: Head over to Nanonets website scraping tool and insert your URL.

Step 2: Select Scrape and Download and wait.

Step 3: The tool downloads a file with webpage data automatically.

Using Excel VBA

Excel VBA is quite powerful and can automate many complex tasks easily. Let’s see the steps to use it to scrape a website page.

Step 1: Open Excel and create a new workbook.

Step 2: Open the Visual Basic Editor (VBE) by pressing Alt + F11.

Step 3: In the VBE, go to Insert -> Module to create a new module.

Step 4: Copy and paste the following code into the module:

    Sub ScrapeWebsite()
        ' Requires two references (Tools -> References in the VBE):
        '   Microsoft WinHTTP Services, version 5.1
        '   Microsoft HTML Object Library

        ' Declare variables
        Dim objHTTP As New WinHttp.WinHttpRequest
        Dim htmlDoc As New HTMLDocument
        Dim htmlElement As IHTMLElement
        Dim url As String

        ' Set the URL to be scraped
        url = "https://www.example.com"

        ' Make a request to the URL
        objHTTP.Open "GET", url, False
        objHTTP.send

        ' Parse the HTML response
        htmlDoc.body.innerHTML = objHTTP.responseText

        ' Loop through the table cells and print each one to the Immediate window
        For Each htmlElement In htmlDoc.getElementsByTagName("td")
            Debug.Print htmlElement.innerText
        Next htmlElement
    End Sub

Step 5: Modify the URL in the code to the website you want to scrape.

Step 6: Run the macro by pressing F5 or by clicking the “Run” button in the VBE toolbar.

Step 7: Check the Immediate window (View -> Immediate Window) to see the scraped data.
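
Printing to the Immediate window is handy for checking the output, but the goal is usually to land the data in a worksheet. The macro below is a minimal sketch of one way to do that and is not part of the original steps. It assumes the page contains plain HTML tables, uses late binding (CreateObject) so that no references need to be ticked, and writes to the active sheet starting at cell A1. The macro name ScrapeTableToSheet and the example URL are placeholders.

```vba
Sub ScrapeTableToSheet()
    ' Hypothetical variant of ScrapeWebsite: writes table cells to the
    ' active sheet instead of the Immediate window.
    Dim http As Object, htmlDoc As Object
    Dim tableRow As Object, tableCell As Object
    Dim url As String
    Dim r As Long, c As Long

    url = "https://www.example.com"   ' placeholder URL

    ' Late binding: no entries in Tools -> References are needed
    Set http = CreateObject("WinHttp.WinHttpRequest.5.1")
    http.Open "GET", url, False
    http.send

    ' Stop early if the server did not return the page
    If http.Status <> 200 Then
        MsgBox "Request failed with status " & http.Status
        Exit Sub
    End If

    Set htmlDoc = CreateObject("htmlfile")
    htmlDoc.body.innerHTML = http.responseText

    ' One worksheet row per <tr>, one column per <td> or <th>
    r = 1
    For Each tableRow In htmlDoc.getElementsByTagName("tr")
        c = 1
        For Each tableCell In tableRow.Cells
            ActiveSheet.Cells(r, c).Value = tableCell.innerText
            c = c + 1
        Next tableCell
        r = r + 1
    Next tableRow
End Sub
```

If the page holds more than one table, this sketch stacks all of their rows in a single block, so you may need to filter on a specific table before looping.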

What should you consider while using VBA to scrape data from a webpage?

While Excel VBA is a potent tool for scraping webpages, there are several drawbacks to consider:

- Complexity: VBA can be complex for non-coders. This makes it difficult to troubleshoot issues.

- Limited features: This approach reads only the HTML that the server returns. It struggles with content that is loaded by JavaScript and with complex HTML structures.

- Speed: Excel VBA can be slow while scraping large websites.

- IP blocking risks: There is always a risk of your IP address being blocked when you send many requests to a large website.

Overall, while VBA can be a useful tool for web scraping, it is important to consider the above drawbacks and weigh the pros and cons before using it for a particular scraping project.
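
One common way to lower the blocking risk listed above is to pause between requests when fetching several pages. The following is only a sketch under stated assumptions: the URLs sit in column A of the active sheet starting at A1, a two-second pause is an arbitrary choice, and the macro name ScrapeUrlList is a placeholder. It records the HTTP status and response size for each URL rather than parsing the pages.

```vba
Sub ScrapeUrlList()
    ' Hypothetical layout: URLs in column A of the active sheet, from A1 down
    Dim http As Object
    Dim r As Long, lastRow As Long

    Set http = CreateObject("WinHttp.WinHttpRequest.5.1")
    lastRow = ActiveSheet.Cells(ActiveSheet.Rows.Count, "A").End(xlUp).Row

    For r = 1 To lastRow
        http.Open "GET", CStr(ActiveSheet.Cells(r, "A").Value), False
        http.send

        ' Record the status code and the size of the response next to the URL
        ActiveSheet.Cells(r, "B").Value = http.Status
        ActiveSheet.Cells(r, "C").Value = Len(http.responseText)

        ' Pause for two seconds before the next request (arbitrary delay)
        Application.Wait Now + TimeValue("00:00:02")
    Next r
End Sub
```

A pause does not guarantee that a site will not block you, and an invalid URL in the list will stop the macro with a runtime error.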

Using Excel Web Queries

Excel web queries can scrape web pages easily. A web query imports data from a web page directly into Excel. Let’s see how to use an Excel web query to scrape web pages to Excel.

Step 1: Create a new Workbook.

Step 2: Go to the Data tab at the top. In the “Get & Transform Data” section, click “From Web”.

Step 3: Enter the URL in the “From Web” dialog box.

Step 4: Click the “OK” button to load the webpage into the “Navigator” window.

Step 5: Select the table or data you want to scrape by checking the box next to it.

Step 6: Click on the “Load” button to load the selected data into a new worksheet.

Step 7: If needed, repeat the above steps to scrape additional tables or data from the same webpage.

Step 8: To refresh the data, simply right-click on the data in the worksheet and select “Refresh”.
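
The refresh in Step 8 can also be triggered from a macro, which is convenient when a workbook holds several web queries. This is a small sketch that assumes you want everything in the workbook updated at once. The macro name is a placeholder.

```vba
Sub RefreshWebQueries()
    ' Refreshes all external data ranges and PivotTable reports in this
    ' workbook, including tables loaded through Data -> From Web
    ThisWorkbook.RefreshAll
End Sub
```

Queries set to refresh in the background may still be running when the macro returns.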

Web queries have a few limitations to keep in mind:

- Web queries can’t scrape data from dynamic webpages or webpages with complex HTML structures.

- Web queries rely on the webpage HTML structure. If it changes, the web query may fail or extract incorrect data.

- Web queries may return unformatted data. For example, a value may be extracted as text instead of a number or date.
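
The last limitation is usually the easiest to work around once the data has loaded. The macro below is a rough sketch that converts text in the currently selected cells to numbers or dates where VBA recognizes them. It relies on IsNumeric and IsDate, which follow your regional settings, so try it on a copy of the data first. It assumes a range of cells is selected, and the macro name is a placeholder.

```vba
Sub ConvertTextToValues()
    Dim cell As Range
    Dim txt As String

    For Each cell In Selection.Cells
        ' Only touch cells that hold plain text; blanks, errors,
        ' formulas and real numbers are left alone
        If VarType(cell.Value) = vbString And Not cell.HasFormula Then
            txt = Trim$(cell.Value)
            If IsNumeric(txt) Then
                ' Reset the format first so a Text-formatted cell accepts a number
                cell.NumberFormat = "General"
                cell.Value = CDbl(txt)
            ElseIf IsDate(txt) Then
                cell.NumberFormat = "General"
                cell.Value = CDate(txt)
            End If
        End If
    Next cell
End Sub
```

Text that should stay as text, such as ZIP codes or IDs with leading zeros, will also be converted, so select only the columns that are meant to be numeric or dates.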

Excel tools like VBA and web queries can extract webpage data, but they often fail on complex webpage structures and may not be the best choice if you have to extract data from multiple pages every day. It’s a lot of manual effort to paste the URL, check the extracted data, clean it, and store it.

Platforms like Nanonets can help you automate the entire process in a few clicks. You can upload the list of URLs into the platform. Nanonets will save tons of your time by automatically:

- Extracting data from the webpage – Nanonets can extract data from any webpage or headless webpages with complex HTML structures and more.

- Structuring the data – Nanonets can identify HTML structures and format the data to retain table structures, font and more so you don’t have to.

- Performing data cleaning – Nanonets can replace missing data points, format dates, replace currency symbols, and more in seconds using automated workflows.

- Exporting the data to a database of your choice – You can export the extracted data to Google Sheets, Excel, SharePoint, a CRM, or any other database of your choice.

If you have any requirements, you can contact our team, who will help you set up automated workflows to automate every part of the web scraping process.

Source: https://nanonets.com/blog/scrape-data-from-website-to-excel/